Abstract

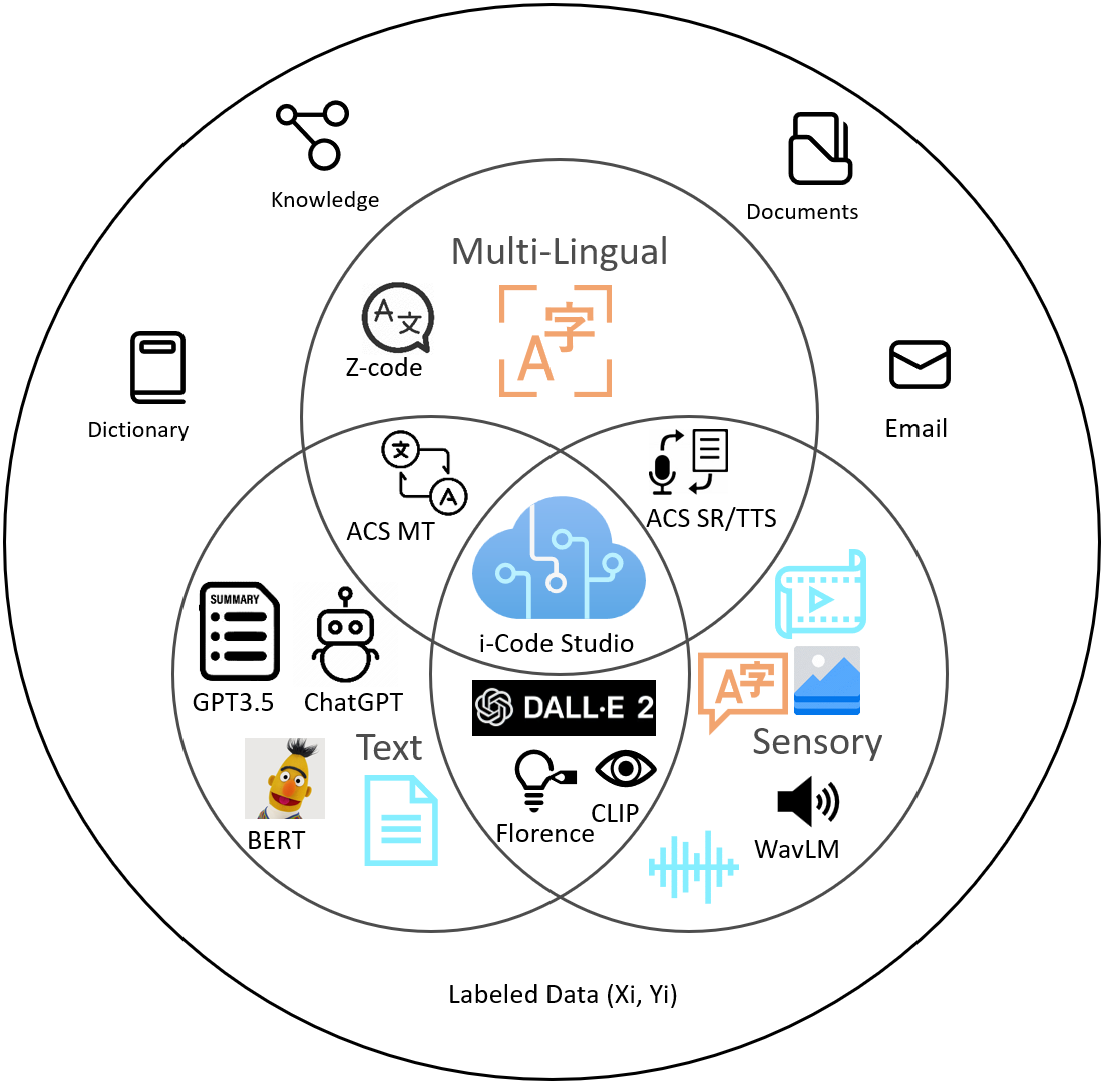

Artificial General Intelligence (AGI) requires comprehensive understanding and generation capabilities for a variety of tasks spanning different modalities and functionalities. Integrative AI is one important direction for approaching AGI: it combines multiple models to tackle complex multimodal tasks. However, a flexible and composable platform that facilitates efficient and effective model composition and coordination is still lacking.

In this paper, we propose the i-Code Studio, a configurable and composable framework for Integrative AI. The i-Code Studio orchestrates multiple pre-trained models in a finetuning-free fashion to conduct complex multimodal tasks. Instead of simple model composition, the i-Code Studio provides an integrative, flexible, and composable setting for developers to quickly and easily compose cutting-edge services and technologies tailored to their specific requirements. The i-Code Studio achieves impressive results on a variety of zero-shot multimodal tasks, such as video-to-text retrieval, speech-to-speech translation, and visual question answering. We also demonstrate how to quickly build a multimodal agent based on the i-Code Studio that can communicate with users and personalize its responses for them.

Architecture

Multimodal Virtual Live Demo

We use the i-Code Studio to build a multimodal live demo that creates a virtual experience for users. Specifically, we use Azure Vision services to interpret the video, Azure Summarization services to summarize it, and Azure Avatar to generate a talking head.
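The three-stage flow described above (interpret, summarize, render) can be pictured as a simple chain of callables. The following is only a minimal sketch under stated assumptions: `Pipeline`, `interpret_video`, `summarize`, and `render_avatar` are hypothetical names invented for illustration, the stages are local stubs rather than real Azure service calls, and nothing here is taken from the i-Code Studio codebase.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

# A stage is any callable that takes the previous stage's output.
# In a real deployment each stage would wrap a service client (assumption).
Stage = Callable[[Any], Any]


@dataclass
class Pipeline:
    """Hypothetical helper that runs stages in order, feeding each the prior result."""
    stages: List[Stage]

    def run(self, payload: Any) -> Any:
        for stage in self.stages:
            payload = stage(payload)
        return payload


def interpret_video(frames: List[str]) -> List[str]:
    # Stub standing in for a vision service: one caption per frame.
    return [f"caption for {frame}" for frame in frames]


def summarize(captions: List[str]) -> str:
    # Stub standing in for a summarization service: joins the captions.
    return " ".join(captions)


def render_avatar(script: str) -> dict:
    # Stub standing in for a talking-head generator: returns a description only.
    return {"type": "talking_head", "script": script}


demo = Pipeline([interpret_video, summarize, render_avatar])
result = demo.run(["frame0", "frame1"])
```

Because every stage shares the same calling convention, swapping one service for another amounts to replacing a single entry in the list, which is the kind of composability the demo relies on.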

Multimodal Assistant Demo

We use the i-Code Studio to build a multimodal agent that can communicate with users and personalize its responses for them. Specifically, the eyes of the agent use Azure Vision services to interpret visual signals and send them to the brain; the ears and mouth use Azure Speech services to collect sound waves and produce sounds; and the brain leverages Azure OpenAI services to integrate the sensory signals received from the eyes and ears and uses them to make decisions.
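The eyes, ears, brain, and mouth division above maps naturally onto four pluggable components. The sketch below is illustrative only: the `Agent` class, its field names, and the `step` method are assumptions made for this example, and the lambdas are local stand-ins for the vision, speech, and language services, not real Azure API calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """Hypothetical agent wiring four components together (not the authors' code)."""
    eyes: Callable[[bytes], str]    # image bytes -> scene description
    ears: Callable[[bytes], str]    # audio bytes -> transcript
    brain: Callable[[str], str]     # fused prompt -> reply text
    mouth: Callable[[str], bytes]   # reply text -> synthesized audio bytes

    def step(self, image: bytes, audio: bytes) -> bytes:
        seen = self.eyes(image)
        heard = self.ears(audio)
        # The brain receives both sensory signals in a single prompt.
        prompt = f"Scene: {seen}\nUser said: {heard}"
        reply = self.brain(prompt)
        return self.mouth(reply)


# Stub components so the sketch runs without any external service.
agent = Agent(
    eyes=lambda image: "a person waving",
    ears=lambda audio: audio.decode("utf-8"),
    brain=lambda prompt: "Reply to: " + prompt.splitlines()[-1],
    mouth=lambda text: text.encode("utf-8"),
)

out = agent.step(b"", b"hello")
```

Keeping each sense behind a plain callable means the decision logic in `step` does not need to know which service backs it.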